Stop trying to micro-manage the algorithm. The manual controls you relied on for a decade are gone.

For years, ecommerce strategy was defined by "intent harvesting." It was linear, predictable, and controllable.

That linearity is broken.

Today, platforms like TikTok, Instagram, and even Google’s AI Overviews don’t wait for a specific keyword. They predict intent based on behavioral signals and visual context. They operate as predictive matching engines, not just search engines.

This shift has created a massive blind spot for technical marketers.

In this article, we show you how to stop fighting the "black box" and how to start programming it instead.

From Explicit Query to Predictive Matching

Traditional, keyword-based search required a near exact text match. Modern discovery engines, like those in Google Performance Max and TikTok Shop, rely on neural matching and vector search.

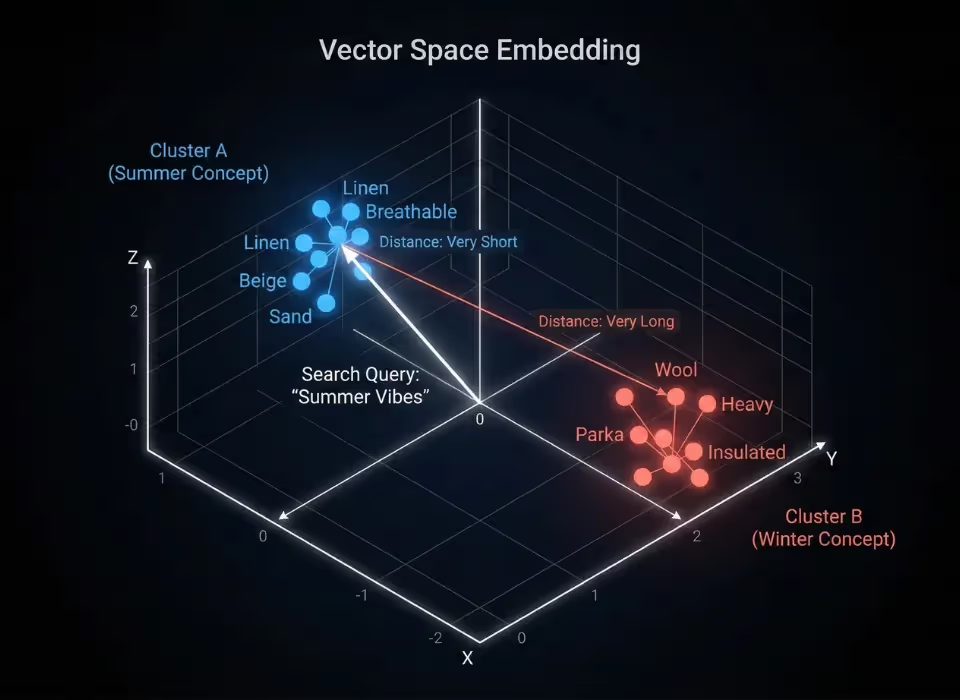

In a vector-based system, the algorithm doesn't just scan for matching words. It converts queries, images, and product attributes into numerical vectors (points in a multi-dimensional space). It then calculates the distance between these points to determine relevance.

This means the engine understands concepts, not just words. It understands that a search for "summer vibes" is semantically close to "linen," "breathable," "pastel," and "beachwear."

The "Invisible" Connection

Consider the mechanics of a modern conversion path:

- Old Way (Explicit): The user searches for men's running shoes, size 10. The ad shows a specific SKU.

- New Way (Predictive): The user watches a marathon training video on YouTube. Google PMax infers "active runner" intent $\rightarrow$ Pushes a running shoe ad in the Discovery feed.

In the second scenario, there was no search query. The "match" happened because the algorithm connected the user's behavioral signal (watching a video) with the product's semantic attributes.

If your product data is sparse—if you lack attributes like product_highlight, custom_labels, or detailed product_detail attributes—your product exists as a weak, undefined point in that vector space. The AI cannot draw a line to the user because you haven't given it enough coordinates to define where you sit.

You are no longer bidding on keywords; you are bidding on audience signals. Your product feed is the translation layer that tells the AI, "This signal matches this product."

Social Commerce Is a Visual Search Engine

On platforms like TikTok, Instagram, and Pinterest, the "search query" is rarely text. The query is the image itself.

These platforms rely on computer vision to index content. The algorithm scans the pixels in your creative to identify objects, colors, textures, and text overlays. It uses this visual data to build a profile of the product and match it with users who have engaged with similar visual patterns.

This creates a fundamental conflict for advertisers who use a "one-feed-fits-all" strategy.

The "White Background" Penalty

Standard Google Shopping best practices demand clean, white-background product photography. This maximizes clarity for price comparison. However, if you syndicate that same feed directly to Meta or TikTok, you are effectively opting out of discovery.

Social discovery algorithms prioritize visual saliency—unique, high-contrast imagery that breaks the pattern and stops the scroll. A generic pack-shot on a white void lacks the visual signals (context, scale, emotion) that social algorithms crave. When you feed a social algorithm a sterile image, it treats the ad as low-quality content and suppresses its reach or charges a higher CPM to serve it.

Fix It with Feed-Based Asset Segmentation

You don’t need to reshoot your inventory. You need to restructure your data flow.

Most ecommerce platforms (Shopify, Magento) store multiple image types. The strategy is to use your feed management tool to map different assets to different destinations:

- Google Shopping Feed: Map

image_linkto your standard white-background URL. - Meta/TikTok Catalog: Use a rule to map

image_linkto yourlifestyle_image_linkor "secondary image" URL.

By segmenting your assets at the feed level, you ensure that Google gets the precision it demands, while Meta gets the "vibe" it requires to fuel its visual matching engine.

Beyond the Image: Structured Data As the New Targeting Lever

When you open a Performance Max or Advantage+ campaign today, you will notice a stark absence: the targeting tab is mostly empty. You can’t dial up bids for specific demographics or granular interests with the precision you once had.

The platform assumes it knows your customer better than you do. But it only knows what you tell it.

In this "black box" environment, the product feed is the only transparent input left to influence targeting. If you treat your feed purely as an inventory list (Title, Price, Image), you are giving the AI the bare minimum. To control who sees your ads, you must shift from "maintenance" to "strategic enrichment."

The "Invisible" Attributes That Drive Discovery

Most marketers obsess over the Product Title. While important, the neural matching engines rely heavily on backend attributes to map products to abstract user intents.

You need to populate the fields that don't always show up on the ad creative but heavily influence the matching logic:

- product_detail: This is where you define technical specifications (e.g., Screen Refresh Rate, Heel Height, Active Ingredient). This allows the AI to match a user searching for "gaming monitor 144hz" to your product, even if "144hz" isn't crammed into the title.

- product_highlight: These are the bullet points that feed the AI summarizers in SGE. Use this space for benefits, not just features.

- custom_label_0 through custom_label_4: Use custom labels to tag products for segmentation. By tagging products in your feed as "Summer Collection" or "Gifts for Him," you can create Asset Groups in PMax that force the AI to respect your merchandising strategy.

Machine Logic vs. Marketing Fluff

One of the most common failures in AI discovery is the disconnect between brand language and machine taxonomy.

Your brand might call a color "Midnight Sky." A user searches for "Dark Blue." The AI might make the connection, but you shouldn't rely on it.

- The Fix: Use feed rules to map your brand-specific color (Midnight Sky for example) to a standardized value within the color (Blue) attribute (or color_map for Amazon), ensuring the algorithm instantly recognizes the base hue.

- The Result: You keep your branding on the frontend, but you give the algorithm the concrete data anchor it needs on the backend.

Automating Context at Scale

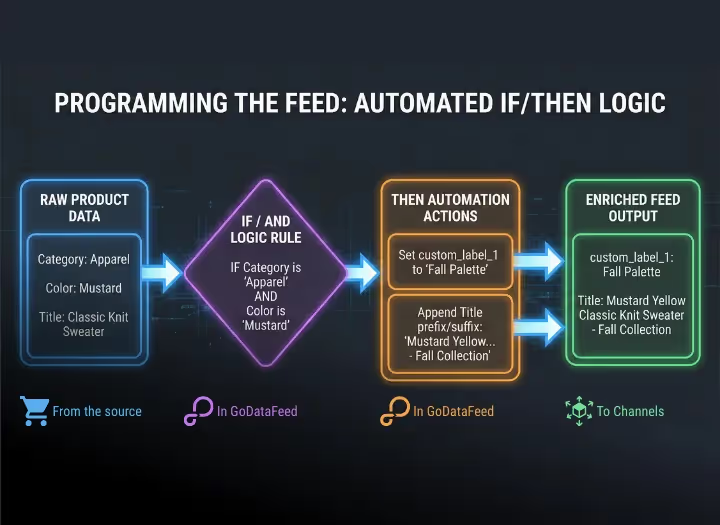

You cannot do this manually for 10,000 SKUs. This is where feed management logic becomes a competitive weapon.

Using a rule-based system (like GoDataFeed), you can create "IF/THEN" logic to enrich data dynamically:

IF title contains "Gore-Tex" AND category is "Jackets" THEN append "Waterproof" to product_highlight AND set material to "Gore-Tex".

This instantly transforms a raw inventory line into a rich, attribute-dense asset that is primed for discovery.

Feed the Engines that Power Discovery

The platforms have built incredible discovery engines—neural networks capable of predicting intent before a user types a single word. But if it only has low-grade crawl data to run on, the engine stalls. It defaults to broad, inefficient matching because it lacks the precision to do otherwise.

If you provide high-octane, structured, attribute-rich data, the engine outperforms manual management every time.

Start by auditing for "invisible attributes." Empty fields. Generic values. A lack of context.

Stop guessing what the algorithm sees—and start controlling it. Request a forensic feed audit to uncover the hidden data gaps costing you visibility.

.png)