Optimizing for large language models (LLMs) is, in practice, optimizing for Retrieval-Augmented Generation (RAG). In this model, the quality of an answer depends largely on which “chunks” of content the AI can retrieve, and that in turn depends on how closely the vector representations of your product data align with the user's query.

To optimize your product feed for 2026, you need to understand the RAG architecture, which underpins the search-backed answers of AI assistants like ChatGPT, Perplexity, and Gemini.

Understanding RAG is critical because it shows how an LLM finds and selects the data it answers from. It is effectively the "ranking algorithm" of the AI era.

Inside the RAG Loop: Retrieval First, Answers Second

When a user asks a specific question—"What are the best trail running shoes for wide feet under $150?"—the LLM does not rely solely on its pre-trained memory (which is static and often outdated). Instead, it performs a two-step process:

- Retrieval: The system queries a database of trusted, external information (like a product index or vector database) to find relevant "chunks" of text. It converts the query into a vector and looks for chunks whose vectors sit close to concepts such as "trail running," "wide fit," and "budget-friendly."

- Generation: It combines the user’s question with those retrieved chunks to generate a natural language answer.
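
The two steps above can be sketched in a few lines of Python. This is a toy illustration, not how any particular AI product is built: the `embed` function below is a simple word-count stand-in for a learned embedding model, and `embed`, `cosine`, `retrieve`, `build_prompt`, and both sample products are hypothetical names and data made up for this example. The final call to an LLM is left out; the sketch stops at the prompt that would be sent to it.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts.
    A real system would use a learned model that captures meaning."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: dict[str, str], k: int = 1) -> list[tuple[str, float]]:
    """Step 1 (Retrieval): score every chunk against the query, keep the top k."""
    q = embed(query)
    scored = [(pid, cosine(q, embed(text))) for pid, text in chunks.items()]
    return sorted(scored, key=lambda s: s[1], reverse=True)[:k]


def build_prompt(query: str, chunks: dict[str, str], hits: list[tuple[str, float]]) -> str:
    """Step 2 (Generation): combine the question with the retrieved chunks.
    The resulting prompt is what would be handed to the LLM."""
    context = "\n".join(f"- {chunks[pid]}" for pid, _ in hits)
    return f"Answer using only these products:\n{context}\n\nQuestion: {query}"


# Hypothetical feed entries: one sparse title, one attribute-rich description.
chunks = {
    "sparse": "Men's Runner - Blue",
    "rich": "Men's trail running shoes, wide fit (2E), wide toe box, price $129",
}
query = "best trail running shoes for wide feet under $150"

hits = retrieve(query, chunks, k=1)
prompt = build_prompt(query, chunks, hits)
print(hits)
print(prompt)
```

In this toy setup the sparse title shares no words with the query, so it scores zero and never reaches the prompt. A real embedding model is more forgiving than word overlap, but the principle holds: a product can only be retrieved on attributes that are actually present in its data.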

If your product data is sparse, the retrieval step fails. If you sell a wide-width trail shoe but your feed only says "Men's Runner - Blue," the vector similarity score will likely be too low. The system will skip your product and retrieve a competitor whose structured data explicitly tags "Width: 2E" or "Feature: Wide Toe Box". This feed data must also flow into your storefront's schema.org structured data so that it is visible to the crawlers behind AI search engines like Perplexity and is indexed correctly in Google's Shopping Graph.
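
One common way to carry feed attributes onto the storefront is schema.org `Product` markup in JSON-LD. The sketch below shows one possible mapping, assuming a feed row with the hypothetical field names `name`, `description`, `brand`, `price`, and `currency`; every other field is passed through as a schema.org `additionalProperty`. The function name `feed_row_to_jsonld` and the sample row are made up for illustration, and how much weight any given crawler gives to `additionalProperty` is not something this example can promise.

```python
import json

# Hypothetical core fields that map to dedicated schema.org properties.
CORE_FIELDS = {"name", "description", "brand", "price", "currency"}


def feed_row_to_jsonld(row: dict) -> dict:
    """Map one feed row to a schema.org Product document.
    Non-core fields become additionalProperty / PropertyValue pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": row["name"],
        "description": row["description"],
        "brand": {"@type": "Brand", "name": row["brand"]},
        "offers": {
            "@type": "Offer",
            "price": row["price"],
            "priceCurrency": row["currency"],
        },
        "additionalProperty": [
            {"@type": "PropertyValue", "name": key, "value": value}
            for key, value in row.items()
            if key not in CORE_FIELDS
        ],
    }


# Hypothetical feed row for the wide-width trail shoe.
row = {
    "name": "Men's Trail Running Shoe",
    "description": "Trail running shoe with a wide toe box.",
    "brand": "ExampleBrand",
    "price": "129.00",
    "currency": "USD",
    "Width": "2E",
    "Feature": "Wide Toe Box",
    "Activity": "Trail Running",
}

doc = feed_row_to_jsonld(row)
# Embed the output in a <script type="application/ld+json"> tag on the product page.
print(json.dumps(doc, indent=2))
```

Generating the markup from the feed, rather than maintaining it by hand, keeps the storefront and the feed describing the same product in the same terms.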

When retrieval fails, the LLM either excludes you entirely or, worse, hallucinates.

This creates a new economy where product data must be concise but dense with meaning. Fluff marketing copy is noise. Explicit attributes (Material, Activity, Fit, Compatibility) act as distinct entities and signals.

From Long-Tail Keywords to Semantic Coverage

AI search is changing consumer behavior. Users are increasingly asking LLMs to recommend products based on complex, conversational needs, not short keyword phrases. If your product data lacks semantic density, it will remain invisible. An LLM can only retrieve and generate answers from information it can actually interpret.

In other words, the skills ecommerce marketers built mastering long-tail keyword optimization still matter—but the objective has shifted. Instead of capturing every query variation, the goal is to fully describe the product’s functional reality so the AI can match it to intent, even when the user never uses industry terminology.

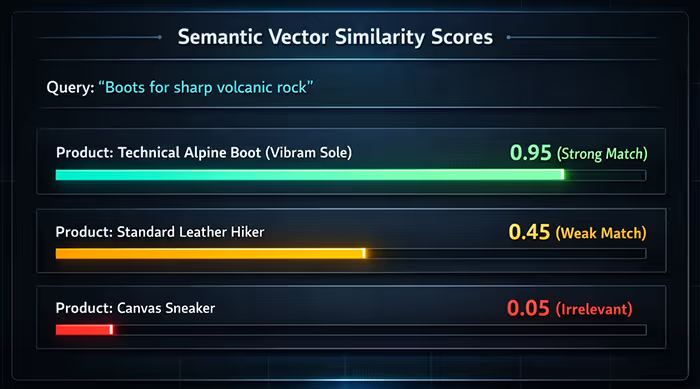

As an example, let's look at how the LLM compares to traditional search for this query:

"I need boots for a 7-day trek over sharp volcanic rock while carrying a 50lb pack."

Traditional Search:

Scans for the exact keywords "7-day" and "volcanic rock." It likely returns any product with "rock" or "trek" in the name, often missing the technical intensity of the request.

LLM (AI Search):

Understands that "sharp volcanic rock" requires high-abrasion resistance and specialized traction. It also infers that a "50lb pack" on a multi-day trip necessitates high ankle support and a stiff midsole to prevent fatigue—matching the query to the concept of a technical backpacking boot rather than just a "hiking shoe."

The AI's retrieval system identifies products tagged with "Outsole: Vibram Megagrip", "Rand: 360-degree Rubber", or "Shank: Stiff/Backpacking", even if the user never used those technical terms.

This opportunity, however, comes with a prerequisite.

The LLM can only make this connection if the underlying data exists. If your product is perfect for sub-zero temperatures but you haven't explicitly tagged it with "Insulation Level" or "Temperature Rating" in your feed, the LLM cannot bridge the gap.
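
A simple feed audit can flag these gaps before they cost you visibility. The sketch below is one possible approach, not a standard: the `REQUIRED_ATTRIBUTES` map, the attribute names, and the sample product are all hypothetical, and the right list of required attributes will depend on your own categories.

```python
# Hypothetical map of category -> attributes a shopper's question may hinge on.
REQUIRED_ATTRIBUTES = {
    "boots": ["outsole", "rand", "shank", "temperature_rating"],
    "running_shoes": ["width", "activity", "drop_mm"],
}


def missing_attributes(product: dict, required: dict[str, list[str]]) -> list[str]:
    """Return the required attributes that are absent or blank for this product."""
    needed = required.get(product.get("category", ""), [])
    missing = []
    for attribute in needed:
        value = product.get(attribute)
        # Treat a missing key, None, and whitespace-only strings as gaps.
        if value is None or not str(value).strip():
            missing.append(attribute)
    return missing


# Hypothetical feed entry: good outsole data, but other fields are empty.
product = {
    "category": "boots",
    "title": "Alpine Trek Boot",
    "outsole": "Vibram Megagrip",
    "shank": "",
    "temperature_rating": None,
}

gaps = missing_attributes(product, REQUIRED_ATTRIBUTES)
print(f"{product['title']}: missing {gaps}")
```

Running a check like this across the whole feed gives a prioritized list of products that an LLM has no way to match to a specific need.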

What This Demands from Modern Feed Strategy

LLM visibility doesn’t reward clever copy. It rewards complete representation.

Your data feed should leave no ambiguity about what the product is for, who it’s for, and under what conditions it performs best.

We help ecommerce brands and agencies adapt their feeds so they remain legible, complete, and competitive in AI search. Book your free consultation today.
