Traditional search engines act like a card catalog, looking for exact matches.

Large Language Models act like a librarian who has read every book and recommends one based on the plot you describe. That’s because LLMs retrieve information based on patterns of meaning and context, rather than exact query string matching.

In this article, we look at the principles that govern those contextual relationships and show how they shape the way AI sees and surfaces products.

Making the Connection for AI

AI doesn't see words, images, or sounds the way humans do. Instead, it turns that data into a list of numbers called a vector, also known as an embedding.

A vector is the LLM’s internal understanding of what a brand or product represents, built from how and where it’s talked about across the web.

LLM-based systems typically compare vectors using cosine similarity, which measures how closely two vectors point in the same direction. A product or brand counts as relevant when its meaning aligns with the query. The match is on context, not on words.

In other words, the vector captures what your product means, and cosine similarity measures how close that meaning is to a query. Those vector relationships determine whether your product is considered relevant to a given query and included in the response.
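To make the comparison concrete, here is a minimal sketch of cosine similarity in plain Python. The three-number vectors and the product names are invented for illustration; real embeddings come from a model and have hundreds or thousands of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical toy embeddings (assumed values, not output from a real model).
query = [0.9, 0.1, 0.3]            # stands in for the shopper's query
insulated_parka = [0.8, 0.2, 0.4]  # points in nearly the same direction
running_shell = [0.1, 0.9, 0.2]    # points in a different direction

parka_score = cosine_similarity(query, insulated_parka)
shell_score = cosine_similarity(query, running_shell)

# A higher score means the product's vector is better aligned with the query.
print(parka_score > shell_score)
```

A score near 1 means the two vectors point in almost the same direction. A score near 0 means they have little in common.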

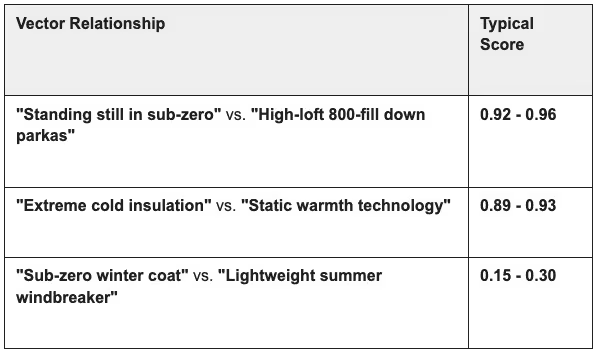

Consider the search query: "I need a jacket for standing still in sub-zero temperatures."

Traditional Search: Looks for the keywords "standing still" and "sub-zero." It likely fails or returns irrelevant results.

LLM: Uses vector embeddings to recognize the intent behind your words. It understands that "standing still" implies a need for high insulation (static warmth) rather than breathability (active warmth). It infers that "sub-zero" requires specific material grades (e.g., 800-fill down).

Example vector relationships:

The old SEO playbook was all about keyword density, but AI has changed the rules. To stay relevant, marketers need to pivot toward "concept density" by focusing on the depth and context that enables accurate vector embedding.

Keyword SEO Was Built for Indexes. AI Search Is Built for Understanding.

For the past 15 years, ecommerce SEO has relied on a single dominant logic: the inverted index, which makes the search engine work like the index at the back of a book, mapping each term to the items that contain it.

If a user searches for "waterproof hiking boots," the search engine scans its database for product titles and descriptions containing the strings "waterproof," "hiking," and "boots." If your product data contains those specific keywords, you are indexed. If you have the right bid and historical performance, you rank.
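The keyword lookup described above can be sketched as a tiny inverted index. This is a simplified illustration, not how any particular search engine is implemented. The product IDs and descriptions are made up, and bidding and ranking are left out.

```python
from collections import defaultdict

def build_inverted_index(products):
    """Map each lowercase term to the set of product IDs whose text contains it."""
    index = defaultdict(set)
    for product_id, text in products.items():
        for term in text.lower().split():
            index[term].add(product_id)
    return index

def keyword_search(index, query):
    """Return the product IDs that contain every term in the query."""
    term_sets = [index.get(term, set()) for term in query.lower().split()]
    if not term_sets:
        return set()
    return set.intersection(*term_sets)

# Hypothetical catalog, invented for illustration.
products = {
    "sku-1": "Waterproof hiking boots for men",
    "sku-2": "GORE-TEX trail footwear for wet climates",
    "sku-3": "Leather hiking boots",
}

index = build_inverted_index(products)
exact_matches = keyword_search(index, "waterproof hiking boots")
print(exact_matches)
```

Only `sku-1` is returned. `sku-2` may suit the shopper just as well, but it never uses the words "waterproof," "hiking," or "boots," so an exact-match lookup cannot find it.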

As we’ve already discussed, AI search doesn’t work this way.

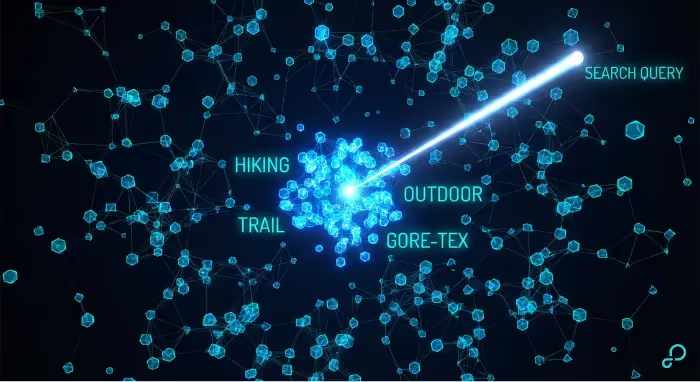

When an LLM processes your product data, it doesn't just store the words; it converts your product information into a high-dimensional vector that represents the product's semantic meaning as a point in mathematical space.

In this space, distance encodes meaning: the closer two concepts sit, the more similar they are.

The LLM knows that "GORE-TEX" is close to "waterproof," which is close to "rain," which is close to "Pacific Northwest."

This is why traditional keyword optimization fails in LLM environments. An LLM doesn't "search" for your keywords; it retrieves vectors that are semantically close to the user's prompt.
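As a rough sketch of what retrieving semantically close vectors can look like, the snippet below ranks a toy catalog by cosine similarity to a query vector. Everything here is an assumption made for illustration: the product names, the numbers, and the idea that each axis has a readable meaning. Dimensions in real embeddings are not individually interpretable.

```python
import math

def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def retrieve(query_vector, catalog, top_k=2):
    """Return the names of the top_k products most similar to the query."""
    scored = [
        (cosine_similarity(query_vector, vector), name)
        for name, vector in catalog.items()
    ]
    scored.sort(reverse=True)  # highest similarity first
    return [name for _, name in scored[:top_k]]

# Hypothetical axes: [wet-weather protection, trail use, formality]
catalog = {
    "GORE-TEX hiking boot": [0.9, 0.8, 0.1],
    "Mesh trail runner": [0.1, 0.9, 0.1],
    "Leather dress boot": [0.2, 0.1, 0.9],
}

# Stands in for a prompt such as "boots for rainy Pacific Northwest hikes".
query_vector = [0.8, 0.7, 0.0]

results = retrieve(query_vector, catalog)
print(results)
```

No keyword is compared at any point. The GORE-TEX boot ranks first because its vector sits closest to the query, while the dress boot drops out even though it is also a "boot."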

If your data is sparse—if it lacks the descriptive attributes that give it semantic weight—your vector is weak and therefore the LLM cannot "see" the relationship between your product and the user's intent.

Even if you have the keyword "boot" in your title, if you lack the contextual data points that define what the boot is for, the retrieval fails.

Some marketing teams still apply old SEO logic (keyword density) to this new tech, assuming that mentioning "running shoe" more times makes it more relevant.

In the vector world, repetition offers diminishing returns. What matters is concept density: providing enough explicit data points for the LLM to place your product in the correct region of its internal map and surface it for relevant queries.

Get an AI Feed Expert in Your Corner

Navigating the shift from keywords to vectors doesn't have to be a guessing game. If you are wondering whether your current feed is ready for the AI era—or just where to even start—you don't have to figure it out alone.

Let’s take a look under the hood together. Book a free consultation to review your data strategy, identify your gaps, and get clear answers on how to position your catalog for discovery.

No strings attached—just a smarter path forward. Book your free consultation today.
