If your product data is “good enough to get approved,” it’s probably costing you money. Approval isn’t the bar—performance is.

How your products show up, how often they’re served, and how efficiently you can bid on them — that’s all dictated by the structure and precision of your feed data.

You won’t catch this in your Merchant Center warnings, but you’ll see it in your ROAS decay. Campaigns underdeliver, asset groups blur together, and marketplaces throttle visibility — all because the data was “fine” but not structured for the way platforms parse and prioritize products.

This is a feed strategy hiding in plain sight.

The Real Cost of Dirty Data: Eligibility Loss and Feed Suppression

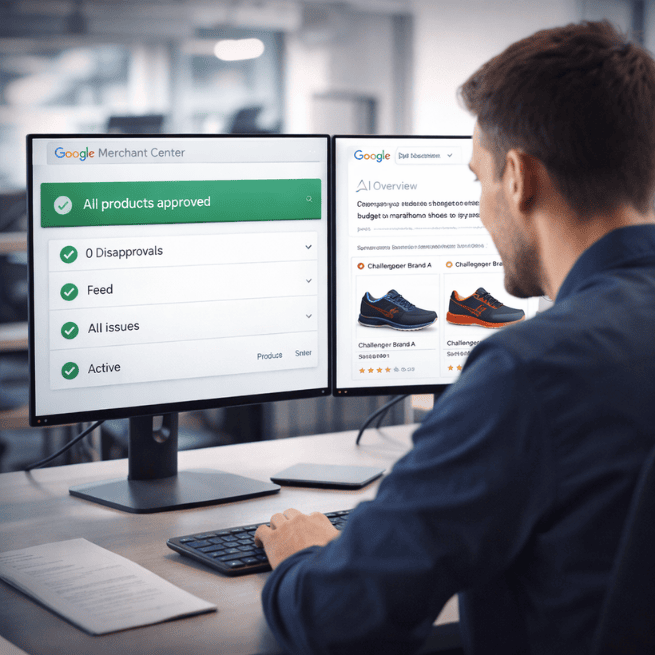

Ever checked your Merchant Center diagnostics, seen “All Products Approved,” and still watched your impressions flatline? That’s feed suppression.

Platforms don’t need to disapprove a product to tank its visibility. If your data is messy, incomplete, or structured incorrectly, they’ll just throttle it in the background. No alerts. No errors. Just fewer impressions and wasted ad spend.

GTIN mismatches are a classic example. Drop a leading zero, mix up an EAN with a UPC, and Google won’t always flag it. But your product silently loses eligibility in key markets or gets sidelined in Shopping auctions.
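A quick pre-flight check can catch these before the platform quietly does. The sketch below validates the standard GS1 check digit and tries to restore a single dropped leading zero on an 11-digit value. The function names (`is_valid_gtin`, `repair_dropped_zero`) are illustrative, not part of any platform or feed tool API, and the repair step assumes the value was originally a 12-digit UPC.

```python
from typing import Optional


def gtin_check_digit(body: str) -> int:
    """Compute the GS1 check digit for the digits that precede it."""
    total = 0
    # Weights alternate 3, 1, 3, ... starting from the rightmost body digit.
    for i, ch in enumerate(reversed(body)):
        total += int(ch) * (3 if i % 2 == 0 else 1)
    return (10 - total % 10) % 10


def is_valid_gtin(value: str) -> bool:
    """True if value is 8, 12, 13, or 14 ASCII digits with a correct check digit."""
    if not (value.isascii() and value.isdigit()):
        return False
    if len(value) not in (8, 12, 13, 14):
        return False
    return gtin_check_digit(value[:-1]) == int(value[-1])


def repair_dropped_zero(value: str) -> Optional[str]:
    """Hypothetical helper: restore one leading zero on an 11-digit value.

    Returns the repaired 12-digit string only if its check digit validates.
    """
    candidate = "0" + value
    if len(value) == 11 and is_valid_gtin(candidate):
        return candidate
    return None
```

A value that fails `is_valid_gtin` but passes `repair_dropped_zero` is a strong hint that a spreadsheet or export step stripped the zero somewhere upstream.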

Meta plays this differently but with the same penalty. Poorly structured titles or missing variant data? Advantage+ Catalog Ads will suppress the group. Meta’s logic defaults to showing just one variant, and it’s rarely your bestseller.

If you’ve seen a product set where one variant hogs all the spend while the others sit untouched, this is why. Meta can’t optimize what it can’t interpret.

Here’s where most teams get tripped up: they think “Approved” means “Ready to perform.” It doesn’t. Platforms parse feeds to decide what gets surfaced, where, and when. If the data doesn’t tell a clear story, your products lose auctions you’ll never even know you missed.

How Product Data Structure Controls Campaign Logic in PMax and Advantage+

Google and Meta don’t just read your feed data — they interpret it to fuel their campaign logic. If your structure is shallow, the automation has nothing to work with.

Take Performance Max. The product_type and custom_label attributes aren’t filler fields; they’re the scaffolding PMax uses to cluster products in asset groups. If your product_type is flat (“Apparel”), Google has no signal to distinguish premium items from budget basics. You end up with asset groups that mix $200 jackets with $20 tees, and your bidding strategy gets sloppy.

We’ve seen it happen: a retailer’s bestsellers get lumped in with clearance stock because the product_type hierarchy was too generic. That’s budget bleeding before the campaign even starts optimizing.

Meta’s Advantage+ Shopping Campaigns face the same gap. The platform uses product category and custom attributes to pair products with creative dynamically. If your data is incomplete or inconsistent, Meta either guesses — or skips pairing altogether. That’s how dynamic ads end up with mismatched products and creative, killing CTR.

What to do instead:

- Use GoDataFeed rules to build deep, hierarchical product_type structures that mirror your site’s category tree: Apparel > Women’s > Dresses > Maxi.

- Layer in custom labels for margin segmentation, seasonal pushes, or lifecycle stages like “New Arrival” or “Clearance.”

- Standardize attribute population — no product should carry blanks or placeholders.
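To make the three steps above concrete, here is a minimal sketch in plain Python. It is not GoDataFeed rule syntax. The input keys (`category_path`, `price`, `cost`), the label names, and the margin thresholds are all placeholder assumptions; only `product_type` and `custom_label_0` are real feed attribute names.

```python
def build_product_type(category_path: list[str]) -> str:
    """Join a site category path into a ' > ' delimited product_type value."""
    levels = [level.strip() for level in category_path if level and level.strip()]
    return " > ".join(levels)


def margin_label(price: float, cost: float) -> str:
    """Hypothetical margin tiers; the thresholds are placeholders."""
    if price <= 0:
        return "margin_unknown"
    margin = (price - cost) / price
    if margin >= 0.5:
        return "margin_high"
    if margin >= 0.25:
        return "margin_mid"
    return "margin_low"


def enrich(item: dict) -> dict:
    """Return a copy of the item with product_type and custom_label_0 populated."""
    enriched = dict(item)
    enriched["product_type"] = build_product_type(item["category_path"])
    enriched["custom_label_0"] = margin_label(item["price"], item["cost"])
    return enriched
```

Because `margin_label` always returns a value, no product leaves this step with a blank label, which is the point of the third bullet.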

Feed structure isn’t just data hygiene — it’s the architecture that platforms use to align products with audiences, bids, and creatives. Weak structure = weak segmentation = wasted budget.

Two Common (but Hidden) Data Issues That Drain Campaign Performance

Even well-structured feeds can carry data issues that quietly erode campaign performance. These rarely show up in platform errors, but they show up in weak ROAS, cannibalized impressions, and poor variant coverage. An analysis of common data feed errors in Google Shopping shows how prevalent and persistent these hidden issues are, even when the diagnostics report shows every product as approved.

Two issues are especially common:

1. Mixed Variant Data Structures

When variant details like size and color are inconsistently placed — sometimes in titles, sometimes in attributes, sometimes missing — platforms can’t reliably group or differentiate them.

On Meta, this causes Advantage+ Shopping to suppress most variants, defaulting delivery to just one option in the set. On Google Shopping, inconsistency creates duplicate detection issues, where similar SKUs compete against each other instead of the market.

The fix is standardization. Size and color should be consistently appended in titles, reinforced in structured attributes, and echoed in descriptions. Feed tools with rule-based transformations make this scalable without engineering support, especially when managing multiple catalogs or brands.
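As a rough illustration of that standardization, the sketch below appends color and size to a title only when they are not already present as whole words, and reports which variant attributes are missing. The `Title - Color - Size` pattern and the helper names are assumptions for this example, not a platform requirement.

```python
import re

VARIANT_KEYS = ("color", "size")


def has_token(text: str, token: str) -> bool:
    """True if token appears in text as a whole word, ignoring case."""
    pattern = rf"(?<!\w){re.escape(token)}(?!\w)"
    return re.search(pattern, text, flags=re.IGNORECASE) is not None


def standardize_title(item: dict) -> str:
    """Append color, then size, unless the title already contains them."""
    title = item["title"].strip()
    for key in VARIANT_KEYS:
        value = (item.get(key) or "").strip()
        if value and not has_token(title, value):
            title = f"{title} - {value}"
    return title


def missing_variant_attributes(item: dict) -> list[str]:
    """List the variant attributes that are blank or absent on this item."""
    return [key for key in VARIANT_KEYS if not (item.get(key) or "").strip()]
```

The whole-word match matters: a naive substring check would decide that size "S" is already in the title "Maxi Dress" and skip it.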

2. Legacy Category Mapping

Outdated Google Product Categories are a common liability. If categories haven’t been refreshed to match Google’s evolving taxonomy, automated targeting in PMax becomes blunt and imprecise.

The consequence: your products end up in the wrong auctions, or worse, don’t surface at all when query relevance is tight.

Updating product categories to align with the latest taxonomy restores targeting precision. Adding custom labels for attributes like margin tier, lifecycle stage, or promotional status further sharpens how products are segmented and bid on, before campaigns even launch.
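One way to spot legacy mappings is to compare each item’s google_product_category against a current copy of the taxonomy. The sketch below assumes the taxonomy is available as text lines shaped like `ID - Path`, with `#` comment lines; treat that layout, the helper names, and any sample values you feed it as assumptions to verify against the file you actually download.

```python
def parse_taxonomy(lines) -> dict:
    """Parse 'ID - Path' lines into {id: path}, skipping blanks and '#' comments."""
    taxonomy = {}
    for line in lines:
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        cat_id, _, path = line.partition(" - ")
        taxonomy[cat_id.strip()] = path.strip()
    return taxonomy


def stale_categories(items, taxonomy: dict) -> list:
    """Return ids of items whose category is neither a known ID nor a known path."""
    valid = set(taxonomy) | set(taxonomy.values())
    stale = []
    for item in items:
        category = str(item.get("google_product_category") or "").strip()
        if category not in valid:
            stale.append(item["id"])
    return stale
```

Items with no category at all are flagged too, since an empty value matches nothing in the taxonomy.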

For a comprehensive guide on setting up your feed and optimizing categories, refer to the All-in-one Feed Setup Overview Guide in the GoDataFeed Help Center.

Clean Feeds Are Easier (and Cheaper) to Optimize Downstream

Weak data forces you to compensate with campaign hacks — negative keyword sculpting, heavy audience layering, constant creative swaps. That’s time and budget spent covering for a feed that should’ve been fixed upstream.

When brand names aren’t normalized — “Nike,” “NIKE,” “nike” — Shopping campaigns fragment across inconsistent search queries. PMax asset groups split similar products, scattering spend where it should be consolidated.
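Normalizing brand spellings is a small rule with an outsized effect. A minimal sketch, assuming you maintain your own lookup of canonical brand names (the `CANONICAL_BRANDS` table here is a hypothetical stand-in):

```python
# Hypothetical lookup: case-folded spelling -> canonical brand name.
CANONICAL_BRANDS = {
    "nike": "Nike",
}


def normalize_brand(raw: str) -> str:
    """Collapse whitespace, then map known spellings to one canonical form."""
    cleaned = " ".join(raw.split())
    return CANONICAL_BRANDS.get(cleaned.casefold(), cleaned)


def brand_variants(items) -> dict:
    """Group the raw spellings found in a feed under their normalized brand."""
    variants = {}
    for item in items:
        raw = item.get("brand") or ""
        variants.setdefault(normalize_brand(raw), set()).add(raw)
    return variants
```

Running `brand_variants` on a feed before applying the rule shows how many spellings are currently splitting each brand.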

On Meta, sloppy naming weakens lookalike audience quality. The platform cross-references product data to model audiences — messy inputs give you noisy, underperforming outputs.

A clean base feed also makes supplemental strategies easier to deploy. You can layer in promo-specific data, like seasonal sale flags or time-limited drops, without risking overwrites to your core data. That’s the difference between executing a flash sale in hours vs. rebuilding the feed structure under pressure.
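One way to picture that layering is a merge that only lets promo-specific fields through. This is a local sketch, not a description of how any platform processes supplemental feeds; the `PROMO_FIELDS` allow-list and the function name are assumptions for illustration.

```python
# Hypothetical allow-list: the only fields a promo layer may set.
PROMO_FIELDS = ("sale_price", "custom_label_1")


def apply_supplemental(core, supplemental, allowed=PROMO_FIELDS):
    """Merge promo rows into core items by id without touching core fields."""
    promo_by_id = {row["id"]: row for row in supplemental}
    merged = []
    for item in core:
        out = dict(item)  # copy so the core feed stays untouched
        promo = promo_by_id.get(item["id"], {})
        for field in allowed:
            if promo.get(field) not in (None, ""):
                out[field] = promo[field]
        merged.append(out)
    return merged
```

When the sale ends, you drop the promo rows and rerun the merge; the core feed never changed, so there is nothing to roll back.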

Good data doesn’t just improve targeting — it keeps your ops team out of the weeds.

If You’re Managing Feeds at Scale, Here’s How to Keep Your Data Tight

Once you’re managing hundreds or thousands of SKUs, keeping product data clean isn’t optional — it’s the only way to prevent small issues from scaling into performance problems.

Here’s the workflow that keeps feeds in shape:

- Schedule recurring feed audits to catch duplicate GTINs, empty fields, and formatting inconsistencies across variants.

- Set feed rules that enforce:

  - Title formatting that leads with the product keyword, followed by variant details like size or color.

  - A consistent product_type hierarchy deep enough to reflect real category structure, not just “Apparel” or “Electronics.”

  - Custom labels for critical segmentation points like “High ROAS,” “Clearance,” “Seasonal Push,” or “Q4 Priority.”
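The audit half of this workflow can be sketched in a few lines. The example below flags duplicate GTINs, blank or placeholder fields, and product_type values that are too shallow. The required-field list, the placeholder set, and the minimum depth of 2 are assumptions to tune for your own catalog.

```python
from collections import defaultdict

REQUIRED_FIELDS = ("id", "title", "gtin", "brand", "product_type")
PLACEHOLDERS = {"", "n/a", "na", "none", "null", "tbd"}  # assumed placeholder values


def is_blank(value) -> bool:
    """True for missing values and common placeholder strings."""
    return str(value if value is not None else "").strip().lower() in PLACEHOLDERS


def audit_feed(items, min_type_depth: int = 2) -> dict:
    """Return duplicate GTINs, empty fields per item, and shallow product types."""
    ids_by_gtin = defaultdict(list)
    empty_fields = {}
    shallow_types = []
    for item in items:
        item_id = item.get("id")
        missing = [f for f in REQUIRED_FIELDS if is_blank(item.get(f))]
        if missing:
            empty_fields[item_id] = missing
        if not is_blank(item.get("gtin")):
            ids_by_gtin[str(item["gtin"]).strip()].append(item_id)
        levels = [p for p in str(item.get("product_type") or "").split(">") if p.strip()]
        if len(levels) < min_type_depth:
            shallow_types.append(item_id)
    duplicates = {g: ids for g, ids in ids_by_gtin.items() if len(ids) > 1}
    return {
        "duplicate_gtins": duplicates,
        "empty_fields": empty_fields,
        "shallow_product_types": shallow_types,
    }
```

Run it on a schedule and compare each report with the last one, so new gaps surface before they reach a campaign.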

For advanced text manipulation within your feed, see “Feed rules: How to use Text Replacement” in the GoDataFeed Help Center.

Then close the loop: track campaign metrics like asset group ROAS or Shopping CTR against these data updates. If you’ve restructured product_type or refreshed custom labels, performance shifts will show up fast — if you’re watching.

Most teams skip this. The feed gets approved, it runs, and nobody checks back until ROAS slips. By then, fixing the data takes longer — and costs more — than it should’ve.

Data Structure Isn’t Set-and-Forget—It’s an Always-On Performance Lever

Platform requirements don’t sit still. Meta adjusts its Commerce taxonomy. Google updates Product Categories. New ad formats like PMax for Retail shift which attributes drive eligibility and scale. If your data structure isn’t reviewed regularly, performance degrades, quietly and expensively.

Treat feed optimization like campaign optimization: it needs a maintenance window. Every quarter, audit your taxonomy depth, product categorization, and custom labels against platform standards.

If you’re running multichannel — Google, Meta, Amazon, Walmart — build reusable feed rule templates in GoDataFeed. That way, you can adapt feeds by channel or season without rebuilding from scratch every time the platforms shift requirements.

This is how you keep feeds adaptive without the usual drag on your ops team.

The Bottom Line

Bad data won’t show up in your error reports — it shows up in your ROAS. Campaigns lose efficiency, asset groups blur, and products get quietly sidelined by the platforms.

Structured, precise product data is the upstream fix that restores campaign performance.

Action Step:

Start with a diagnostics sweep in your Merchant Center, Commerce Manager, Seller Central, etc. Then audit your feed in GoDataFeed to surface the gaps the channels don’t catch — mismatched GTINs, shallow product types, and missing custom labels. That’s where the performance leaks usually start.
