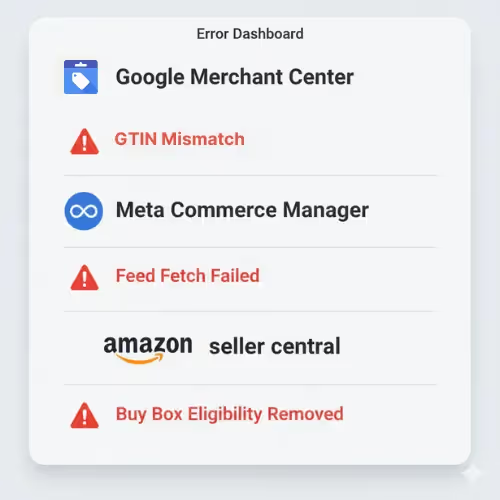

When the price in your product feed doesn’t match the price on your product detail page (PDP), Google and Meta can throttle delivery or suspend items entirely.

The result?

- Impression share drops by as much as 40%

- Shopping ads get disapproved

- CPCs spike as your account’s trust standing drops

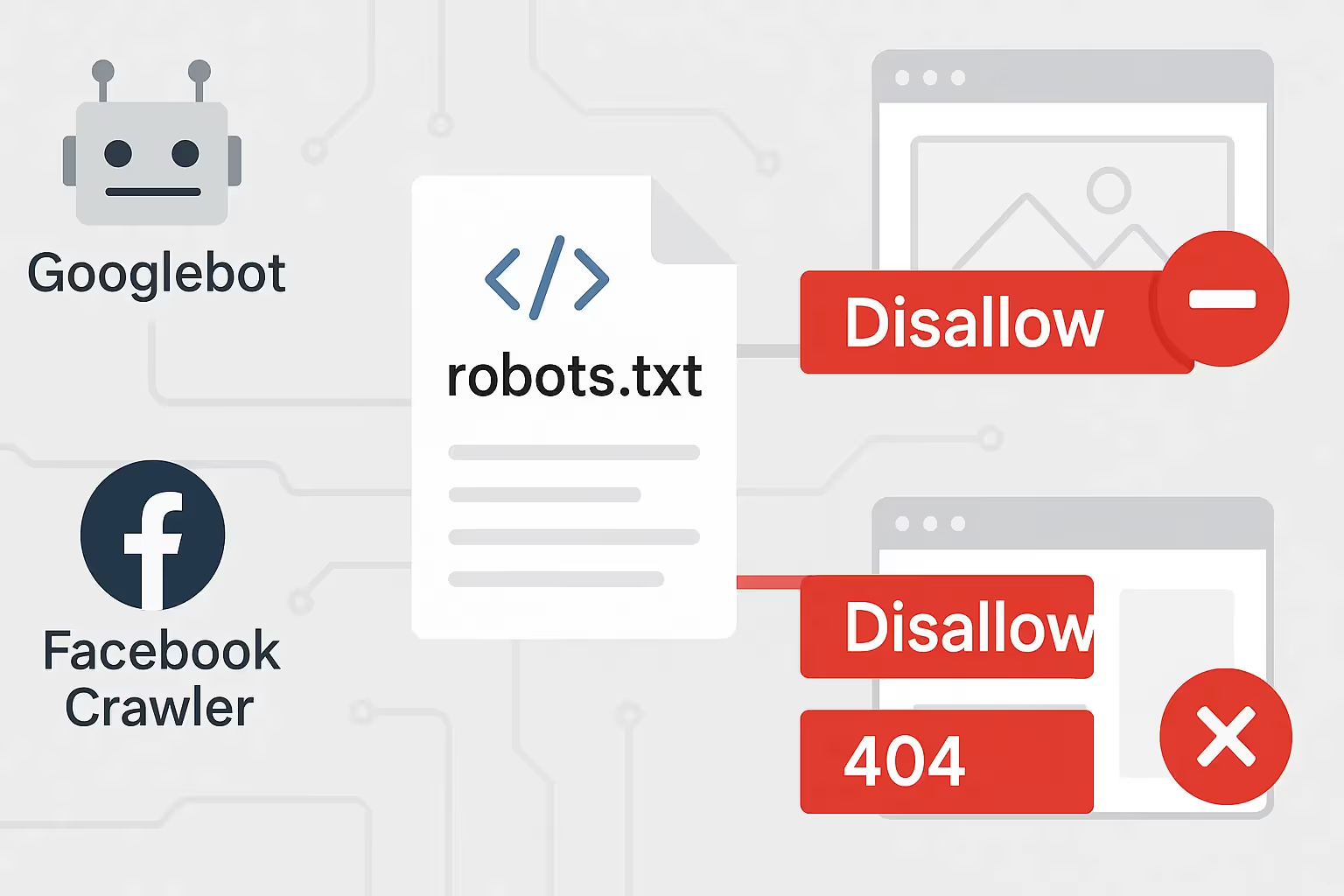

Real-world scenario: a flash promo updates your site price, but your feed only refreshes nightly. Google crawls mid-day → flags mismatch → disapproval. You don’t find out until ROAS tanks.
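To see why refresh frequency matters, you can estimate how long the feed keeps the old price after a site change with simple schedule arithmetic. This is a minimal sketch that assumes refreshes run at a fixed interval from a known start time. The 02:00 nightly run, the dates, and the function names are hypothetical, and the sketch ignores processing and crawl delays.

```python
from __future__ import annotations

from datetime import datetime, timedelta


def next_refresh(after: datetime, interval: timedelta, anchor: datetime) -> datetime:
    """Return the first scheduled feed refresh strictly after `after`.

    Assumes refreshes run every `interval`, starting from `anchor`.
    """
    # Whole intervals elapsed since the anchor (floor division works on timedelta).
    completed = (after - anchor) // interval
    return anchor + (completed + 1) * interval


def mismatch_window(price_change: datetime, interval: timedelta, anchor: datetime) -> timedelta:
    """How long the feed keeps the old price after the site price changes."""
    return next_refresh(price_change, interval, anchor) - price_change


if __name__ == "__main__":
    anchor = datetime(2024, 5, 1, 2, 0)   # hypothetical first nightly run at 02:00
    promo = datetime(2024, 5, 3, 12, 0)   # flash promo goes live mid-day
    print(mismatch_window(promo, timedelta(hours=24), anchor))    # 14:00:00
    print(mismatch_window(promo, timedelta(minutes=15), anchor))  # 0:15:00
```

With a nightly feed, a mid-day promo can sit mismatched for 14 hours. With a 15-minute cadence, that window shrinks to at most 15 minutes.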

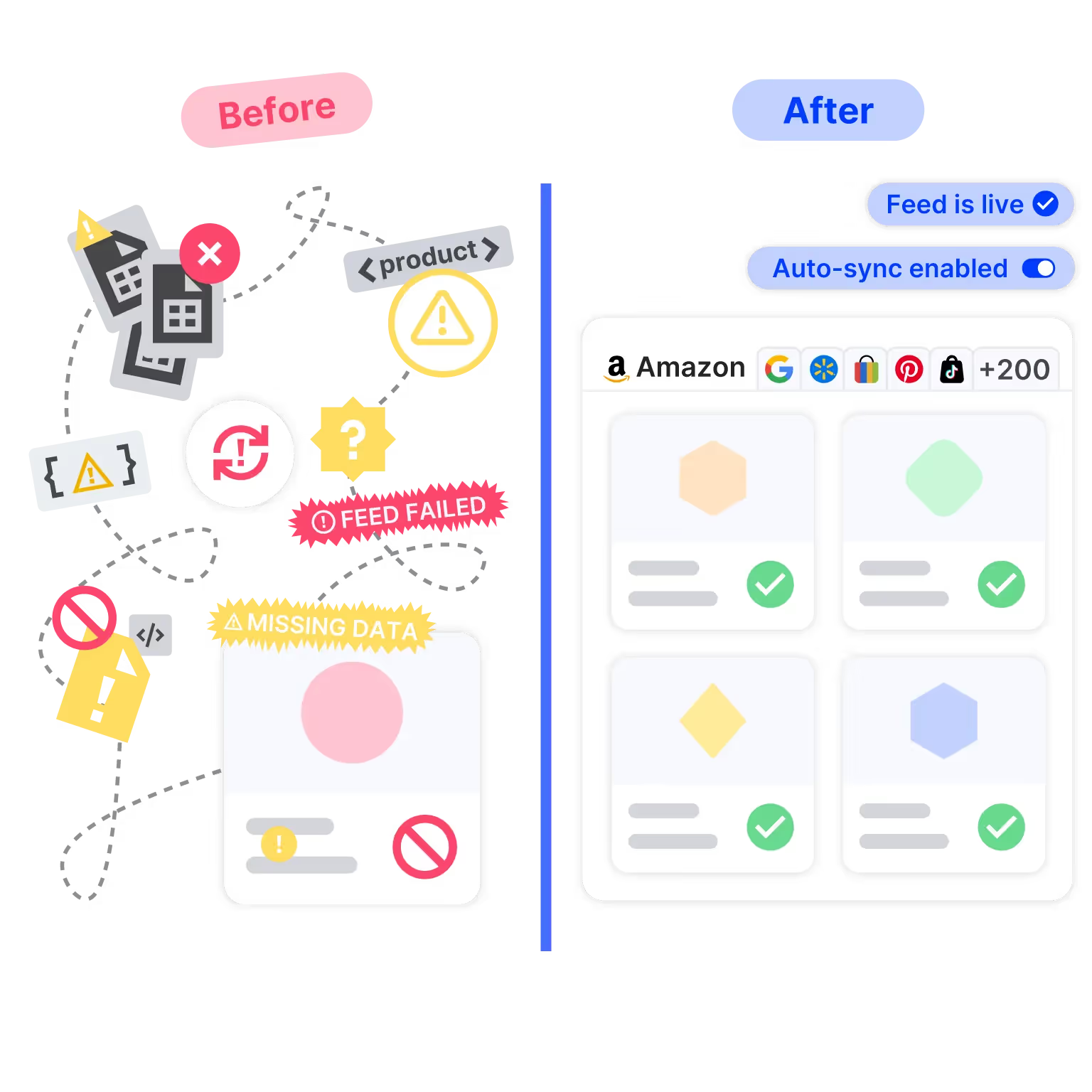

Fix: Automate delta feeds every 10–15 minutes with pre-submit validation. Platforms like GoDataFeed can handle this for you.
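A delta feed sends only the SKUs whose attributes changed since the last successful submission. This is a minimal sketch that assumes you keep the previously submitted snapshot keyed by SKU. The `Item` fields and the `build_delta` name are hypothetical. How the upserts and deletions actually get pushed depends on your feed platform or API and is not shown.

```python
from __future__ import annotations

from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class Item:
    """Hypothetical minimal feed item; real feeds carry many more attributes."""
    sku: str
    price: Decimal
    availability: str  # e.g. "in_stock" or "out_of_stock"


def build_delta(
    previous: dict[str, Item], current: dict[str, Item]
) -> tuple[list[Item], list[str]]:
    """Compare the last submitted snapshot with the current catalog.

    Returns (upserts, deletions): items that are new or whose attributes
    changed, and SKUs that were submitted before but no longer exist.
    """
    # Dataclass equality compares every field, so any price or availability
    # change (or a brand-new SKU, where previous.get() is None) counts as changed.
    upserts = [item for sku, item in current.items() if previous.get(sku) != item]
    deletions = [sku for sku in previous if sku not in current]
    return upserts, deletions


if __name__ == "__main__":
    previous = {
        "A1": Item("A1", Decimal("49.00"), "in_stock"),
        "B2": Item("B2", Decimal("20.00"), "in_stock"),
        "C3": Item("C3", Decimal("15.00"), "in_stock"),
    }
    current = {
        "A1": Item("A1", Decimal("39.00"), "in_stock"),  # flash-sale price
        "B2": Item("B2", Decimal("20.00"), "in_stock"),  # unchanged, skipped
        "D4": Item("D4", Decimal("25.00"), "in_stock"),  # new product
    }
    upserts, deletions = build_delta(previous, current)
    print([i.sku for i in upserts], deletions)  # ['A1', 'D4'] ['C3']
```

Because only changed SKUs go out, each run stays small, and that is what makes a 10–15 minute cadence practical.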

Why does Google disapprove my products for price mismatches—even when the price is correct?

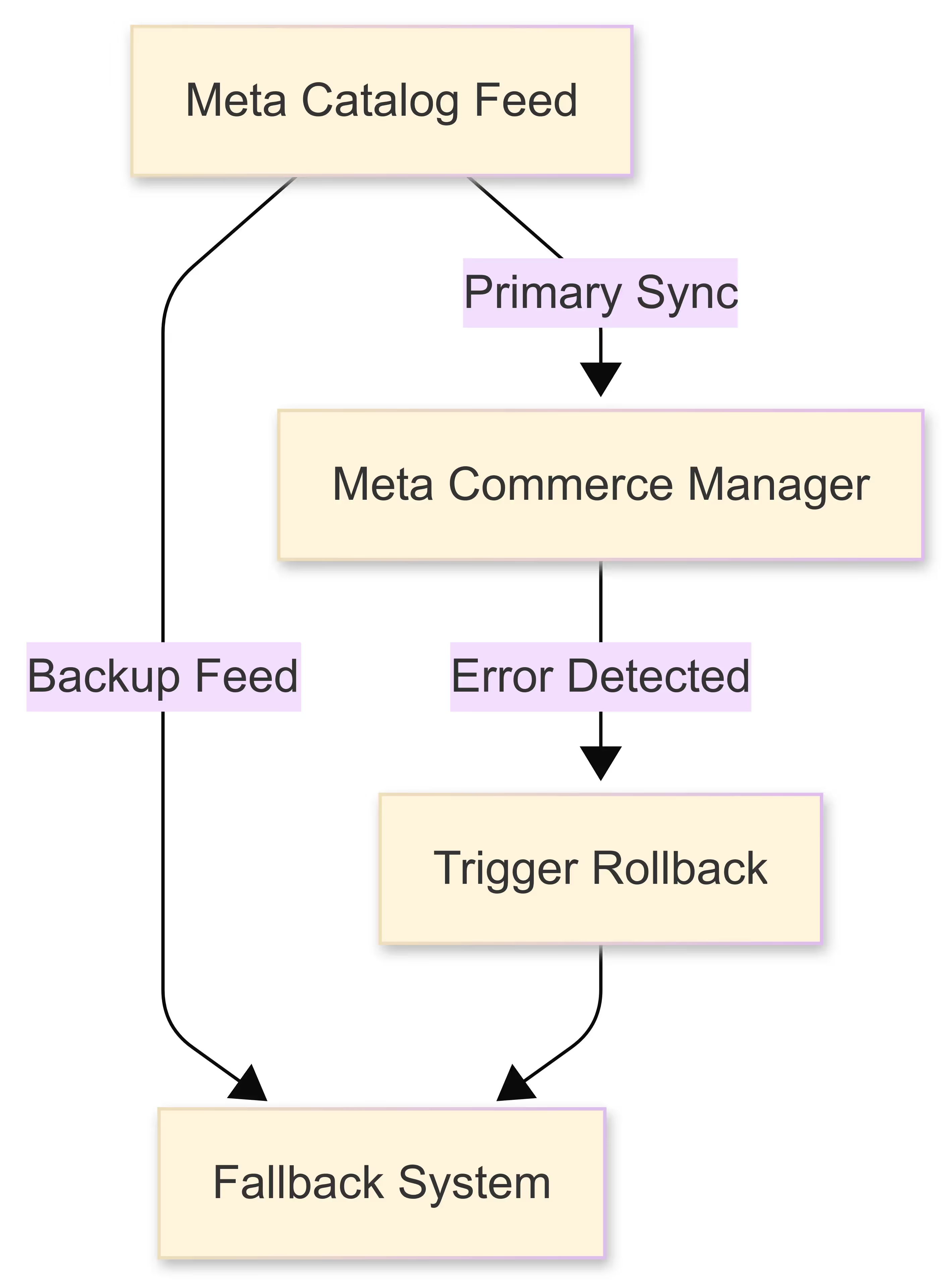

When Google or Meta detects that the price in your feed doesn’t match the price on your product landing page, the platform treats it as a trust violation. It may suspend that SKU, lower its priority, or even halt your entire Shopping or dynamic ad group. For a DTC brand in the $1M–$10M annual revenue range, that hit can cascade: you lose visibility for your best SKUs, waste budget, and confuse automated bidding.

This often happens when promotions, discounts, or flash sales update your website immediately, but your feed refreshes on a delayed schedule or in batches (say, nightly). That mismatch window becomes a blind zone where your best ads are flagged or disapproved before you even know it. Imagine running a big sale Friday evening: your site shows the discount and the campaign launches, but your feed is still pushing the old price. Bam: disapprovals, cancellations, lost momentum.
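You can measure the blind zone in the Friday-sale example if your store records when each SKU’s price last changed and your feed tool records when each SKU was last submitted. This is a minimal sketch under those assumptions. Both timestamp maps and the `blind_zone` name are hypothetical inputs and names, not part of any specific platform.

```python
from __future__ import annotations

from datetime import datetime, timedelta


def blind_zone(
    site_updated_at: dict[str, datetime],
    feed_pushed_at: dict[str, datetime],
    now: datetime,
) -> dict[str, timedelta]:
    """Return SKUs whose site price changed after their last feed submission.

    Each value is how long the feed has been out of date as of `now`.
    SKUs never submitted to the feed count as stale from their site update.
    """
    stale: dict[str, timedelta] = {}
    for sku, site_ts in site_updated_at.items():
        pushed = feed_pushed_at.get(sku)
        if pushed is None or site_ts > pushed:
            stale[sku] = now - site_ts
    return stale


if __name__ == "__main__":
    nightly = datetime(2024, 5, 3, 2, 0)       # last nightly feed run
    sale_start = datetime(2024, 5, 3, 18, 0)   # Friday-evening sale goes live
    site = {"A1": sale_start, "B2": sale_start, "C3": datetime(2024, 5, 2, 9, 0)}
    feed = {"A1": nightly, "B2": nightly, "C3": nightly}
    # A1 and B2 have been mismatched for 30 minutes; C3 is still in sync.
    print(blind_zone(site, feed, now=datetime(2024, 5, 3, 18, 30)))
```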

The fix is to automate delta updates (only changed SKUs) at frequent intervals (e.g., every 10–15 minutes) and add a validation layer that checks each feed price against its landing page before submission. That way, you catch mismatches on your side before the platforms see them, rather than after you’ve been penalized. In essence, let your feed engine act as a gatekeeper rather than a helpless messenger.
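The validation layer can be as simple as comparing each outgoing feed price with the price currently shown on its landing page and holding back anything that disagrees. This is a minimal sketch that assumes you already have page prices extracted per SKU (for example, from the page’s structured data). The `gate_feed` name and the rounding-to-cents rule are hypothetical choices, not a platform requirement.

```python
from __future__ import annotations

from decimal import ROUND_HALF_UP, Decimal

CENT = Decimal("0.01")


def to_cents(value: Decimal) -> Decimal:
    """Round to whole cents, the precision shoppers see, before comparing."""
    return value.quantize(CENT, rounding=ROUND_HALF_UP)


def gate_feed(
    feed_prices: dict[str, Decimal],
    page_prices: dict[str, Decimal],
) -> tuple[list[str], dict[str, str]]:
    """Split outgoing SKUs into (ready, held); held maps SKU -> reason."""
    ready: list[str] = []
    held: dict[str, str] = {}
    for sku, feed_price in feed_prices.items():
        page_price = page_prices.get(sku)
        if page_price is None:
            # No price could be read from the page: safer to hold than to guess.
            held[sku] = "no price found on landing page"
        elif to_cents(feed_price) != to_cents(page_price):
            held[sku] = f"feed {to_cents(feed_price)} != page {to_cents(page_price)}"
        else:
            ready.append(sku)
    return ready, held


if __name__ == "__main__":
    feed = {"A1": Decimal("39.00"), "B2": Decimal("20.00"), "C3": Decimal("15.00")}
    page = {"A1": Decimal("39"), "B2": Decimal("17.50")}  # C3 page failed to parse
    ready, held = gate_feed(feed, page)
    print(ready)  # ['A1']
    print(held)   # {'B2': 'feed 20.00 != page 17.50', 'C3': 'no price found on landing page'}
```

A held SKU could be re-priced from its page or simply left out of that run. The right choice depends on your catalog. This sketch only reports the held SKUs, so nothing mismatched reaches the platform.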